Using Real-Time Streaming to Power Etsy's Offsite Ads

At Etsy, we’re focused on connecting our more than five million sellers with buyers around the world. Etsy sellers have nearly 100 million unique vintage and handcrafted items available on our marketplace, and making those goods visible across the wider Web is one of the most important ways we can help buyers find them. Etsy’s Offsite Ads program enables our sellers to advertise across major sites like Google, Facebook, Bing, and Pinterest (to name a few). It’s a complex operation: We run Offsite Ads on multiple platforms in twelve countries (twelve countries for Google, six each for Facebook and Bing), and each of these catalogs has to get its own copy of every listing we place, to ensure that the ads always show correct translations and the right pricing and shipping information. Additionally, we have to keep those twenty-four catalogs, each with tens of millions of listings, in sync as our sellers make changes throughout the day, all while taking user data and privacy choices into account. This calls for a system capable of efficient, near real-time updates; if an offsite ad doesn’t match what a buyer would view browsing the listing directly on Etsy, that’s a bad experience for everybody.

The Good, The Bad and The GPLA

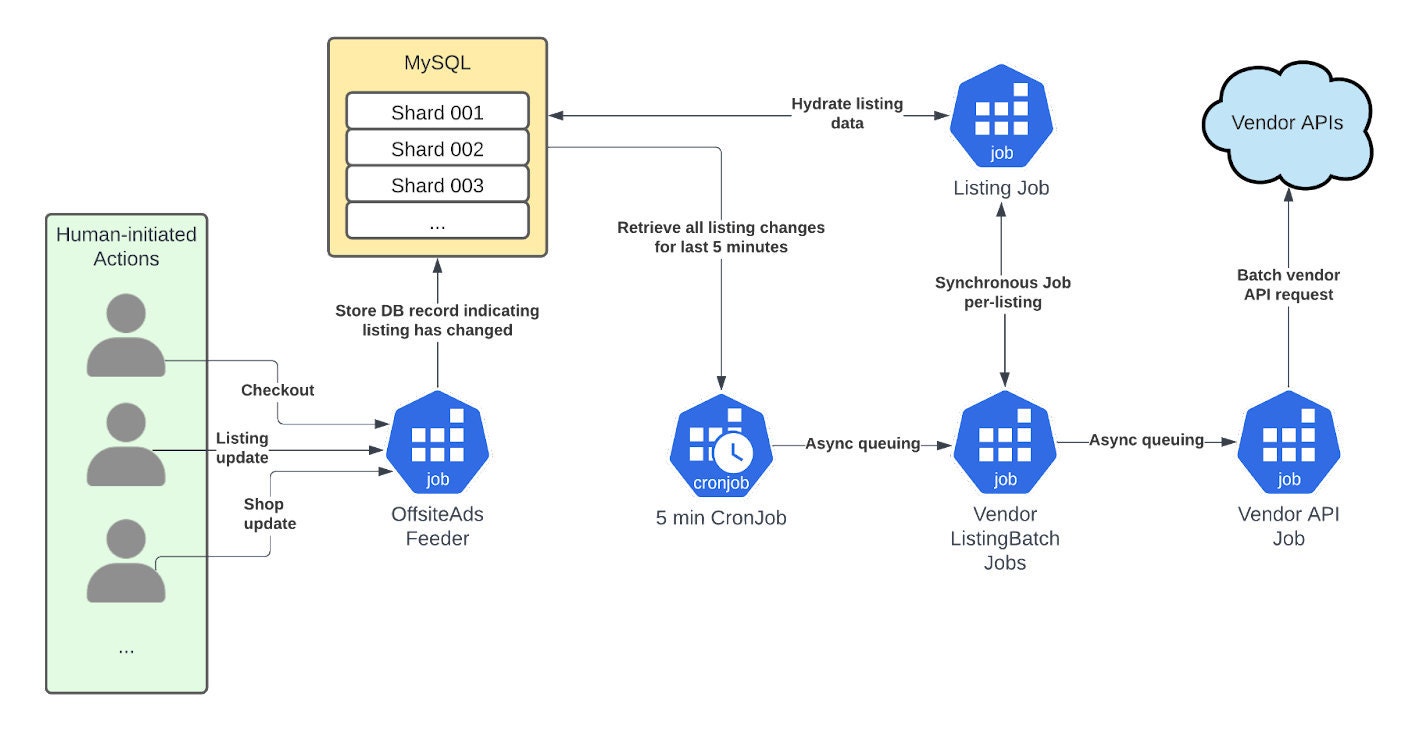

Historically, Etsy syndicated eligible listings to our partnering vendors through an internal integration with Google’s Product Listing Ads, known as “GPLA”. Etsy built its GPLA integration in 2012, based on asynchronous background processing. Whenever we needed to update our third-party vendors – when sellers changed their listings, when items got purchased and became unavailable – GPLA would kick off a job to execute the update. The job would check whether the listing change was worthy of updating offsite ads, and if so, would store a new record in a database table indicating that the listing needed to be resyndicated. Another job, running every five minutes, queried for any records inserted since the last run, generated a new Google Product payload per listing, and finally queued up a last job to send one or more giant JSON blobs containing up to 300 changed listings to Google's Batch Product API to propagate the changes. GPLA was built with this architecture because Etsy was still hosted in our own data centers at the time, which limited us to technologies that had already been provisioned, tested and made available to Etsy engineers.

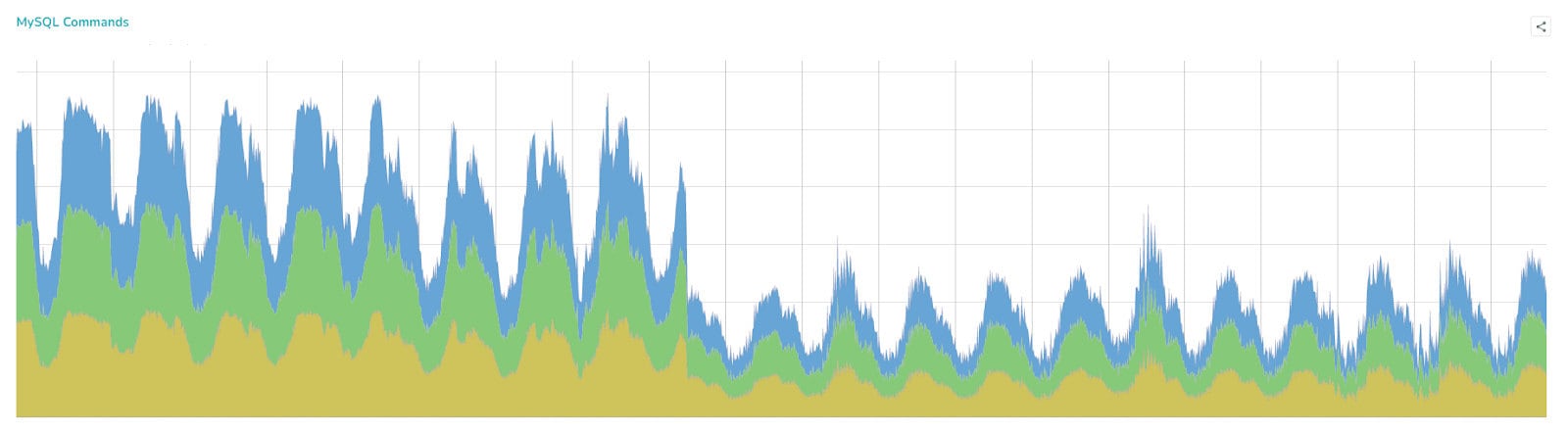

GPLA became synonymous with “offsite ads” at Etsy. As Etsy grew, and as our sellers added more and more great listings, we wanted to show those listings off in more places around the Internet to drive traffic, so we extended GPLA: to Facebook, Bing, Pinterest, and a few other smaller vendors. Each extension increased the size of the table we used to track changes, since we had to store new records for every country and vendor combination served. Every added vendor meant added code complexity, and over time the system became harder to maintain and harder for our developers to work with. Factor in the significant growth in our listings catalog, and GPLA had become a major infrastructure cost. At the system’s peak in mid-2020, it accounted for a whopping 1.5 billion logs written per day, 40% of all writes to our databases, and about 50% of all queries to our databases. GPLA’s query overhead at times was greater than that of people browsing Etsy.com and the Etsy apps combined.

Surely, in the modern age of cloud computing, we could create something more efficient.

Enter Offsite Listing Feeds

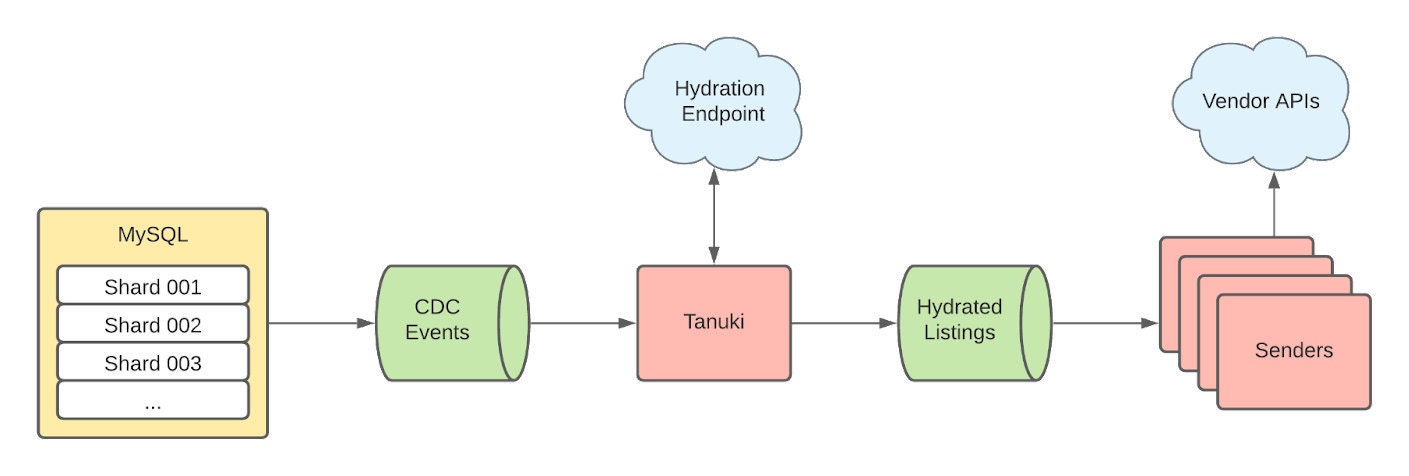

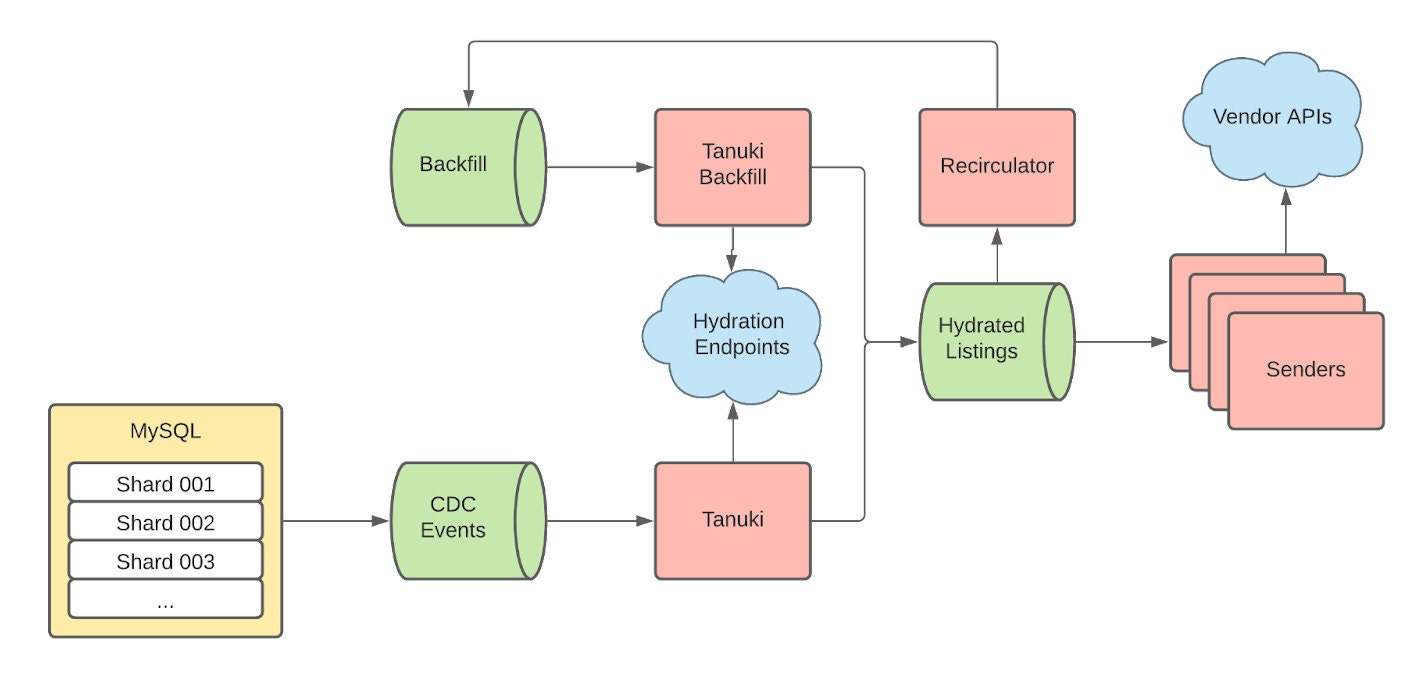

In early 2020, we set out to build a product that would completely replace Etsy’s GPLA. The new system, called Offsite Listing Feeds (OLF), is a reactive architecture that features real-time streaming built on top of modern technologies like Change-Data-Capture (CDC), Kafka Streams, and Kubernetes. With Etsy’s streaming data team managing our Kubernetes cluster, the OLF team could focus our efforts on the nitty-gritty details of constructing the pipeline.

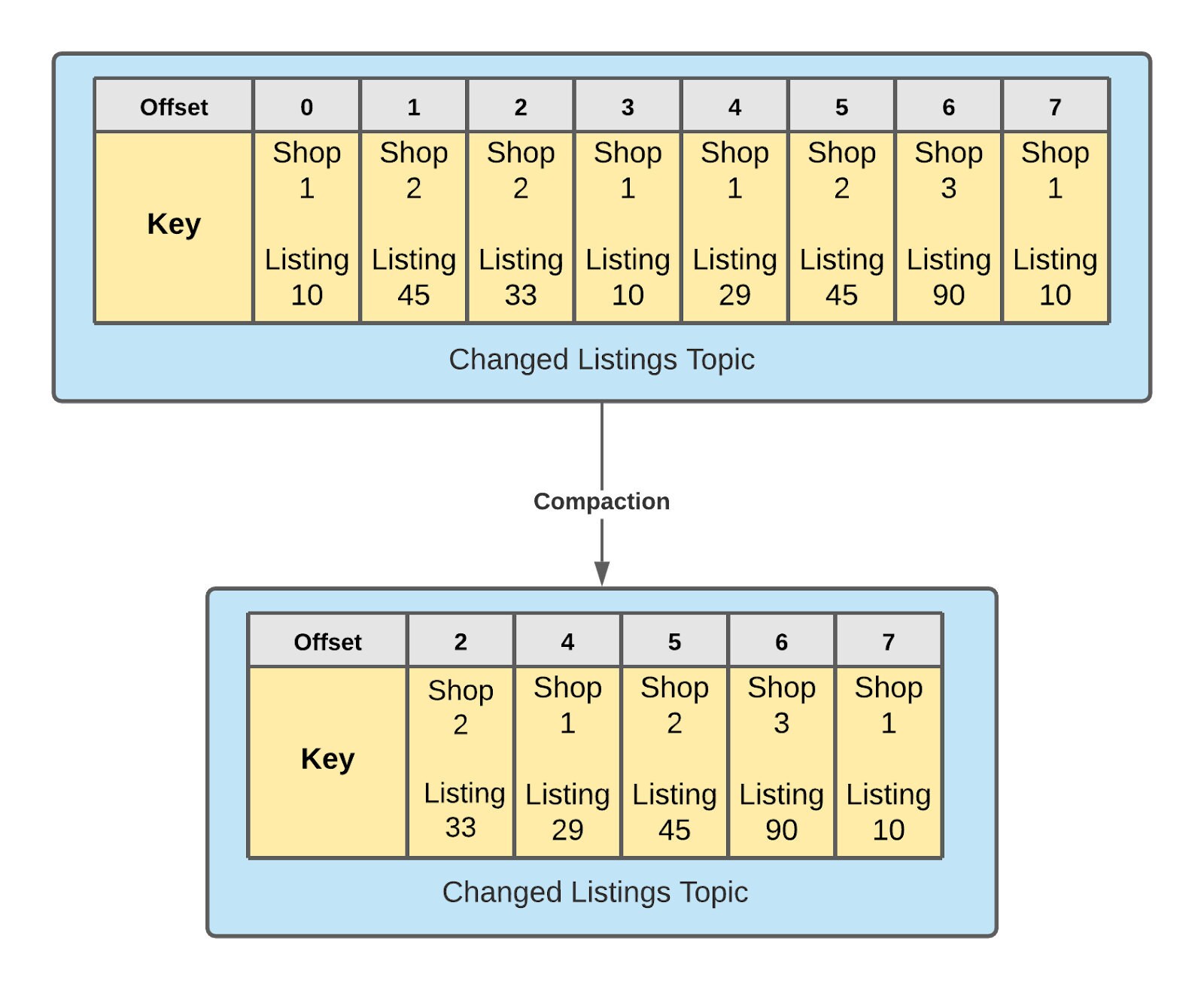

Our pipeline starts with the sharded MySQL databases that store all of Etsy’s listing data. We only have to watch a small subset of those tables to know when a listing has changed. When CDC monitoring notices a record being modified, that record gets emitted onto a Kafka topic. Similar updates are compacted: for example, if a seller changes a listing’s price three times in quick succession, we can discard the first two updates and take interest only in the final one. This helps reduce the number of extraneous updates moving through the pipeline.
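To make the compaction idea concrete, here is a minimal Python sketch of "keep only the latest update per key." It is not how Kafka implements compaction internally, and the `ChangeEvent` type, its fields, and the choice of `(listing_id, field)` as the key are assumptions made for illustration.

```python
from typing import Iterable, NamedTuple


class ChangeEvent(NamedTuple):
    """Hypothetical shape of a CDC update; not Etsy's actual record format."""
    listing_id: int
    field: str
    value: str


def compact(events: Iterable[ChangeEvent]) -> list[ChangeEvent]:
    """Keep only the most recent event for each (listing_id, field) key."""
    latest: dict[tuple[int, str], ChangeEvent] = {}
    for event in events:
        key = (event.listing_id, event.field)
        # Remove any older event first so the survivor sits at its latest position.
        latest.pop(key, None)
        latest[key] = event
    return list(latest.values())


events = [
    ChangeEvent(101, "price", "20.00"),
    ChangeEvent(101, "price", "18.00"),
    ChangeEvent(202, "title", "Vintage lamp"),
    ChangeEvent(101, "price", "19.50"),
]
compacted = compact(events)
# Only the final price change for listing 101 survives, alongside the title change.
```

The three price changes collapse into one record, so downstream stages hydrate listing 101 once instead of three times.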

The next stage of the pipeline makes use of Tanuki, an in-house streaming system built on top of Kafka. Tanuki was created by our search platform team to allow them to update search indexes in real time as listing data changes. The system, based on record-at-a-time processing, is designed to be extensible, and provides pre-built solutions for common patterns while also letting teams build out custom pipelines. When we receive a CDC update, Tanuki executes an API request to our hydration endpoint, one for each affected listing. That endpoint is where the bulk of OLF’s work happens. From a Shop ID/Listing ID tuple, the hydration endpoint returns all of the information that any of our vendors downstream will need to know. This data comes from a variety of sources: our MySQL shards, datasets, and Etsy’s machine learning platform.

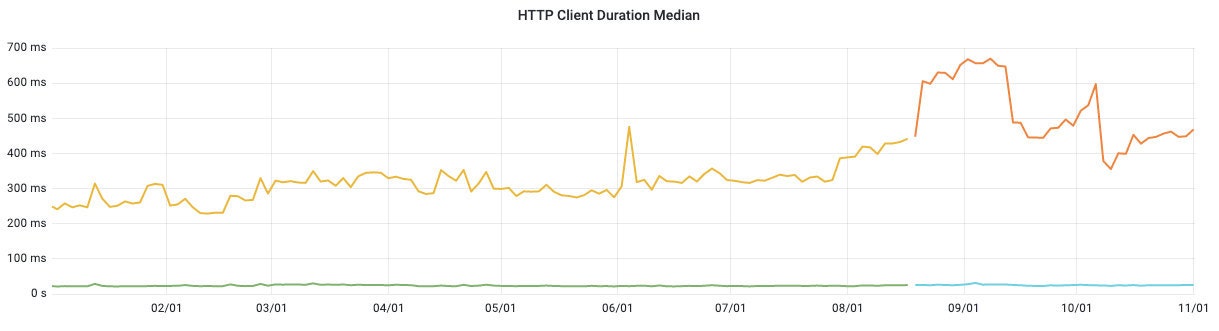

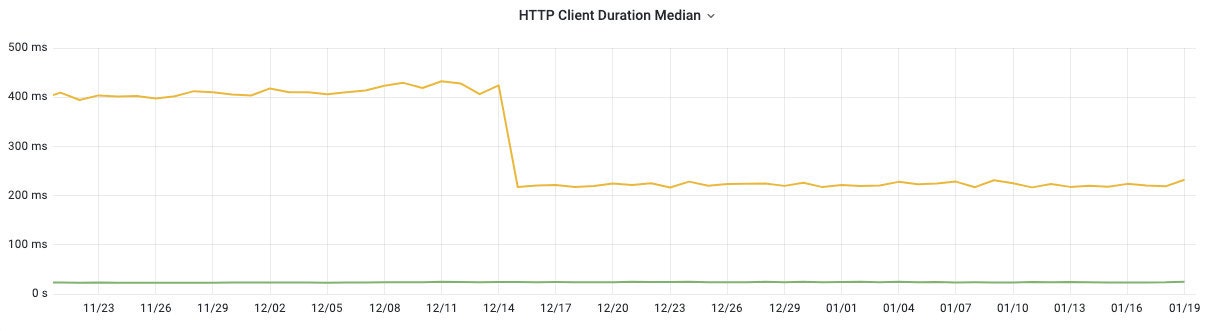

The first version of our OLF pipeline relied on a single synchronous API endpoint. But as the pipeline grew in responsibility, as we integrated more systems and began handling more complex data, performance of the hydration endpoint began to suffer dramatically. Over the past year, median response time more than doubled, from 200ms to 450ms. As response time increased, our maximum throughput kept dropping: we could only process so many records per second. It was an unsustainable situation. To address the problem, we refactored the hydration endpoint into nine smaller endpoints, each tasked with gathering a subset of our data. Tanuki still only makes calls to a single API endpoint, but that endpoint now fans out concurrently to the smaller ones to offload the work. This new architecture allows us to keep adding data to the hydration endpoint as needed, without every additional query adding to the total response time. The hydration endpoint is now only as slow as the slowest subsidiary endpoint.
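The fan-out pattern can be sketched in a few lines of Python with `asyncio`. This is an illustration of the general technique rather than the actual endpoint: the sub-endpoint names, the simulated latencies, and the `fetch_part` and `hydrate` functions are all hypothetical.

```python
import asyncio


async def fetch_part(name: str, delay_s: float) -> dict:
    """Stand-in for one subsidiary endpoint; the sleep simulates I/O latency."""
    await asyncio.sleep(delay_s)
    return {name: f"{name}-data"}


async def hydrate(shop_id: int, listing_id: int) -> dict:
    # Hypothetical sub-endpoints and latencies, for illustration only.
    parts = {"pricing": 0.05, "shipping": 0.10, "attributes": 0.02}
    # All sub-requests run concurrently, so the total wait is roughly the
    # slowest one (about 0.10s here) rather than the sum (0.17s).
    results = await asyncio.gather(
        *(fetch_part(name, delay) for name, delay in parts.items())
    )
    hydrated = {"shop_id": shop_id, "listing_id": listing_id}
    for part in results:
        hydrated.update(part)
    return hydrated


listing = asyncio.run(hydrate(shop_id=1, listing_id=101))
```

Adding a fourth sub-endpoint to `parts` only increases the total latency if it is slower than the current slowest call.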

The final stage of the OLF pipeline is the set of “Sender” applications. The Senders are where we integrate with the third-party APIs: they handle authentication, payload creation, request batching, and, ultimately, syndication to the vendors. Every catalog that OLF powers is treated as a separate application on our Kubernetes cluster, which enables graceful failures at the catalog level. This also gives OLF fine-grained control over the number of pods allocated to any given catalog. Catalogs with high throughput – like the U.S. catalogs – generally run with more pods than catalogs with lower listing update throughput. This catalog-level granularity allows us to save costs and make sure our pipeline is running as efficiently as possible.

To reduce duplicated data in our hydration endpoint, we try to keep the format of the hydration API vendor-agnostic and instead rely on formatting logic within the Sender applications to convert our hydration API data into the format each vendor expects. With this architecture, enabling a new vendor on OLF is a simple task that primarily involves three steps: writing an API integration class, formatting the hydrated listing data into the vendor’s expected format, and then testing and ramping. For vendors that already have all of that code written, we’re able to ramp up new markets powered by OLF easily through the use of config flags. The Sender applications make heavy use of config variables, which define things such as the vendor, country and language enabled for that specific application variation. This ultimately reduces developer toil when we ramp a new catalog onto this system, since it requires no code modifications. We just create a new configuration file with the correct values defined and wait for Kubernetes to create the pods, which then begin processing messages from the Hydrated Listings Kafka topic.
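As a rough sketch of this split between vendor-agnostic data and per-vendor formatting, consider the Python below. Everything in it is illustrative: `SenderConfig`, the formatter functions, the shape of the hydrated listing, and the payload field names are assumptions, not the real Sender code or the vendors' actual schemas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SenderConfig:
    """Values a Sender variation might read from its config file (assumed)."""
    vendor: str
    country: str
    language: str


def format_google(listing: dict, cfg: SenderConfig) -> dict:
    # Illustrative payload shape only, not Google's real product schema.
    return {
        "id": listing["listing_id"],
        "title": listing["titles"][cfg.language],
        "price": listing["prices"][cfg.country],
        "country": cfg.country,
    }


def format_facebook(listing: dict, cfg: SenderConfig) -> dict:
    # Illustrative payload shape only, not Facebook's real catalog schema.
    return {
        "retailer_id": listing["listing_id"],
        "name": listing["titles"][cfg.language],
        "price": listing["prices"][cfg.country],
    }


FORMATTERS = {"google": format_google, "facebook": format_facebook}


def build_payload(listing: dict, cfg: SenderConfig) -> dict:
    """Convert vendor-agnostic hydrated data into one vendor's format."""
    return FORMATTERS[cfg.vendor](listing, cfg)


# Hypothetical vendor-agnostic hydration output.
hydrated = {
    "listing_id": 101,
    "titles": {"en": "Vintage lamp", "de": "Vintage-Lampe"},
    "prices": {"US": "20.00 USD", "DE": "18.50 EUR"},
}

# Ramping a new catalog is just another config entry; no formatter changes.
catalogs = [
    SenderConfig(vendor="google", country="US", language="en"),
    SenderConfig(vendor="google", country="DE", language="de"),
    SenderConfig(vendor="facebook", country="US", language="en"),
]
payloads = [build_payload(hydrated, cfg) for cfg in catalogs]
```

In this sketch, supporting a new vendor means adding one formatter to `FORMATTERS`, while supporting a new market for an existing vendor means adding one `SenderConfig`.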

Integrated Machine Learning

In addition to loading all seller-provided attributes, we also rely on an Etsy-built knowledge graph, powered by machine learning, to infer attributes where they’re missing: colors and materials, for instance, or relevant hobbies and occasions, among many other things. OLF defaults to seller-provided values first, because ultimately our sellers know their listings best. The knowledge graph, however, is a useful supplement where relevant attributes haven’t been provided by a seller. The point, after all, is to give vendors all the data we can so that they can show our sellers’ products to the people most likely to want them.
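The "seller first, inferred second" rule amounts to a simple merge. The sketch below assumes attributes arrive as plain dictionaries and that an empty value counts as missing; both are assumptions for illustration, as is the `merge_attributes` name.

```python
def merge_attributes(seller: dict, inferred: dict) -> dict:
    """Prefer seller-provided values; fall back to inferred ones when missing."""
    merged = dict(inferred)
    # Treat None, empty strings, and empty lists as "not provided" (assumed).
    provided = {k: v for k, v in seller.items() if v not in (None, "", [])}
    merged.update(provided)
    return merged


seller_attrs = {"color": "blue", "material": None}
inferred_attrs = {"color": "navy", "material": "ceramic", "occasion": "housewarming"}
attributes = merge_attributes(seller_attrs, inferred_attrs)
```

Here the seller's `color` wins over the inferred one, while `material` and `occasion` are filled in from the inferred values.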

Machine learning models also protect our sellers and buyers by identifying listings that might lead to a fraudulent transaction or otherwise violate key policies. An unbounded score, in the range (-∞, ∞), is generated for every listing, and we have thresholds set for every vendor that OLF powers to help ensure we offer both our buyers and sellers the best experience shopping on Etsy using Offsite Ads. We launched this fraudulent listing model in April of 2021, and have seen a steady decrease in fraud on Etsy attributed to Offsite Ads.

Recirculator Hydration

Since Etsy serves markets outside of the US, we have to constantly take into account currency conversion rates between the listing’s native currency and all other currencies in the world. We track these conversion rates in a MySQL table, updated nightly. And though we could simply add that table to the ones CDC already monitors for us, the result would be a huge influx of record updates whenever rates got changed. We would end up re-syndicating nearly our entire catalog all at once, every night. As it happens, our team values sleep, and not having to routinely interrupt it to handle drastic increases in lag. So we had to come up with a solution to spread out these conversion-rate updates across the day.

Fortunately, Kafka Streams offers a native feature called windowing. Windowing enables an individual record to be held for a preset amount of time before being emitted to an output topic. In our pipeline, after a listing has been hydrated, an additional Kafka app initializes a 23-hour-long window for that specific Listing ID. If any CDC updates come through the pipeline during the day (say, the seller updates the listing’s title), we fully hydrate the listing and extend the window for another twenty-three hours. Additionally, we store that full hydration JSON in a BigTable cache. If no further CDC change happens on the listing in that period, the window closes and our recirculator app emits the record to our Backfill Kafka topic. Tanuki picks up the record from there and hydrates it with a slimmed-down hydration endpoint that returns the price based on the new conversion rate. We merge that smaller API response with data from our BigTable cache and flow it through the Hydrated Listings topic, where our senders treat this hydrated listing no differently than they would a CDC update, syndicating the listing to our vendors if it has changed.
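The recirculation flow is easier to follow as a small simulation. The Python sketch below models the window with plain dictionaries and logical time measured in hours; it does not use the Kafka Streams API. The `Recirculator` class and its method names are hypothetical, and it assumes that a backfill hydration restarts the window just as a full hydration does.

```python
WINDOW_HOURS = 23


class Recirculator:
    """Toy model of the per-listing window; time is a plain number of hours."""

    def __init__(self, window_hours: float = WINDOW_HOURS) -> None:
        self.window_hours = window_hours
        self.deadlines: dict[int, float] = {}  # listing_id -> window close time
        self.cache: dict[int, dict] = {}       # stand-in for the BigTable cache

    def on_hydrated(self, listing_id: int, hydrated: dict, now: float) -> None:
        """A full hydration refreshes the cache and (re)starts the window."""
        self.cache[listing_id] = hydrated
        self.deadlines[listing_id] = now + self.window_hours

    def poll(self, now: float) -> list[int]:
        """Return listing IDs whose window has closed (bound for the backfill topic)."""
        expired = sorted(
            lid for lid, deadline in self.deadlines.items() if deadline <= now
        )
        for lid in expired:
            del self.deadlines[lid]
        return expired

    def on_backfill_hydrated(self, listing_id: int, price_update: dict, now: float) -> dict:
        """Merge the slim price response into the cached hydration (assumed flow)."""
        merged = {**self.cache[listing_id], **price_update}
        self.on_hydrated(listing_id, merged, now)
        return merged


r = Recirculator()
r.on_hydrated(101, {"title": "Lamp", "price": "20.00 USD"}, now=0)
# A CDC update at hour 10 rehydrates the listing and pushes the window to hour 33.
r.on_hydrated(101, {"title": "Vintage lamp", "price": "20.00 USD"}, now=10)
early = r.poll(now=23)  # nothing is due yet
due = r.poll(now=33)    # listing 101's window has closed
merged = r.on_backfill_hydrated(101, {"price": "20.40 USD"}, now=33)
```

Because only the price is refetched and everything else comes from the cache, a quiet listing costs one slim API call per window instead of a full hydration.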

We specifically chose twenty-three hours for a few reasons. First, we wanted to ensure that there would be drift over time, so that listings wouldn’t always be syndicated at the same time every day. This becomes useful for us if our API ever is momentarily unavailable and Tanuki starts to accrue lag. Our pipeline’s natural tendency to spread these updates out over the day allows any such lag to be dispersed over a slightly longer period, ensuring our pipeline isn’t stuck with an uneven distribution of updates to make every day. And second, had we gone with twenty-four hours, there was a slight chance that some listings would hydrate at exactly the same time as our currency changes, meaning ad prices would always be incorrect for those listings. This specifically becomes an issue with Google, which randomly performs validations on the data that we provide by comparing it against what a user would see on our site. If Google thinks it’s found a price discrepancy, it can flag a product for review.
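The drift is simple modular arithmetic. As a small illustration (the function name and the starting hour are made up), this sketch computes the hour of day at which an otherwise unchanged listing would be recirculated on successive cycles:

```python
def recirculation_hours(first_hour: int, window_hours: int, cycles: int) -> list[int]:
    """Hour of day (0-23) at which each successive window closes."""
    return [(first_hour + n * window_hours) % 24 for n in range(cycles)]


with_23 = recirculation_hours(first_hour=2, window_hours=23, cycles=5)
with_24 = recirculation_hours(first_hour=2, window_hours=24, cycles=5)
# with_23 -> [2, 1, 0, 23, 22]: the slot moves one hour earlier each cycle
# with_24 -> [2, 2, 2, 2, 2]: the slot never moves
```

With a 24-hour window, a listing that happened to land on the same hour as the nightly rate update would stay there indefinitely; with 23 hours it moves away after one cycle.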

Migrating onto OLF

Performing a large migration of a business-critical system always has inherent risks – missing a piece of data, system performance being slower than expected, or things just not behaving quite right. Given how important Google Ads are to our sellers’ ability to market their listings and grow their shops, we wanted to be sure we were confident in our migration plan. To gain that confidence in the new OLF architecture, we decided to first migrate Facebook, a vendor that had fewer dependencies throughout Etsy’s codebase. This would give us an opportunity to uncover bugs and performance issues with the pipeline and remedy them before doing a cutover for the rest of the system.

Performing the cutover for Facebook was easy once the APIs had been implemented in our sender apps. All OLF tuples contain a numeric shop ID, so we could take the shop ID modulo a fixed number and compare the result to the ramp percentage we were currently at. Doing a slow percentage-based rollout enabled us to closely watch logs that validated the state of listings being syndicated without being inundated with information. To ramp down Facebook’s fork of GPLA, we applied the same modulo-based ramp formula to prevent shops that had been ramped onto OLF from being syndicated by the legacy system. This also allowed us to find shops that we knew fit into each ramp stage and watch those shops in the Facebook catalog to see if the listing data had changed unexpectedly. Over the course of a few days, we were able to ramp every shop onto OLF. Monitoring the Facebook updates for a couple of weeks gave us the confidence we needed to start migrating GPLA. We replicated this rollout strategy to start syndicating Google updates via OLF once the Google APIs had been integrated into our sender applications.
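A modulo-based ramp of this kind can be sketched as follows. The exact formula used in production isn't given here, so the modulus of 100 and the function names are assumptions; the point is that the same check, inverted, keeps the legacy system from syndicating shops that have already moved.

```python
def is_on_olf(shop_id: int, ramp_percentage: int) -> bool:
    """True if this shop falls inside the current ramp (assumes modulus 100)."""
    return shop_id % 100 < ramp_percentage


def is_on_legacy(shop_id: int, ramp_percentage: int) -> bool:
    """The legacy system handles exactly the shops OLF does not."""
    return not is_on_olf(shop_id, ramp_percentage)


shop_ids = range(1000)
ramped_at_10 = [s for s in shop_ids if is_on_olf(s, 10)]
ramped_at_50 = [s for s in shop_ids if is_on_olf(s, 50)]
```

The check is deterministic, so a shop that is ramped at 10% stays ramped at every later stage, and it is easy to pick a known shop ID for each stage and watch its listings in the vendor catalog.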

Conclusion

In the year and a half that OLF has been in place, we’ve seen a ton of benefits: graceful failure and recovery when a vendor’s API goes down or is otherwise unreachable, reduced query volume leading to massive performance improvements on the MySQL databases, greater ease of development overall, and less developer toil. The query load on some of our MySQL databases has been reduced by over 50%, giving us a significant boost in headroom. Thanks to OLF’s reactive, real-time streaming architecture, we’re able to continue to maintain the success of the Offsite Ads program and help our sellers grow their business by bringing them buyers.